Intuiface's Support for OpenAI's GPT, DALL-E, and Whisper AI Models

We've just introduced native support for OpenAI's GPT, DALL-E, and Whisper AI models. Let's take a look at how they work and what the implications are.

Overview

There are endless examples of "game changing" technology that ultimately failed to change the game. The Segway, Google Glass, Napster, crypto... OK, the last one still has a shot to be something more than just gambling, and these other failures surely influenced the creation of successful alternatives. Nevertheless, the hype was overblown.

The hype is not overblown for Generative AI. It has already started to change the game. This article is about how you can start to take advantage of Generative AI in Intuiface.

What's new in Intuiface

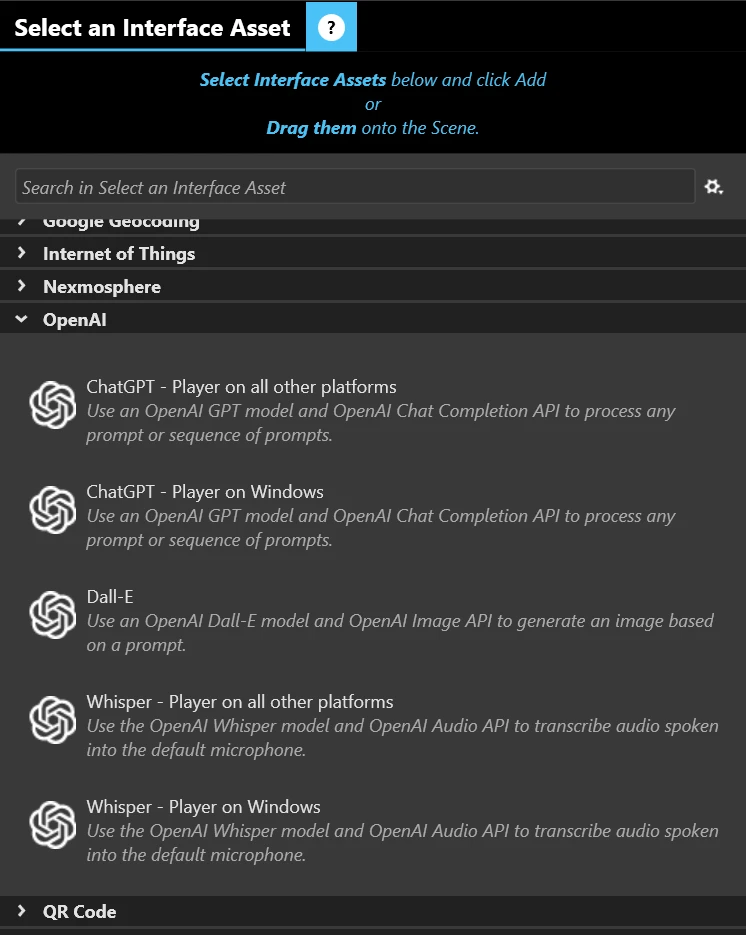

Composer now includes three new interface assets (IAs): ChatGPT, DALL-E, and Whisper.

Here's what they enable:

- ChatGPT

Under the covers, this is the GPT large language model (LLM) (here's an article about GPT-4), which means what you can do in Intuiface works just like ChatGPT. Send a prompt, get a response, and repeat if desired. You can ask anything, provide system guidance, and then parse the results to extract what you want.

- DALL-E

Under the covers, this is the DALL-E image generation model. Create an image based on any prompt.

- Whisper

Under the covers, this is the Whisper speech recognition model. It uses the default microphone to capture the spoken word and transcribe it into text. This text can be used to supplement a prompt sent to the ChatGPT or DALL-E interface assets, but you can use it however you wish.
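To make the "send a prompt, get a response, and repeat" loop concrete, here is a minimal Python sketch of the kind of message list a chat-style request carries. It is an illustration only, not the code inside the ChatGPT interface asset. The function names, the `gpt-4` default, and the sample response are assumptions made for the example; the `system`/`user`/`assistant` roles and the `choices[0].message.content` path follow OpenAI's Chat Completions format.

```python
import json


def build_chat_body(system_guidance, history, prompt, model="gpt-4"):
    """Return the JSON-serializable body for one chat request.

    `model` defaults to "gpt-4" purely as an assumption for this sketch.
    """
    # System guidance always goes first so it frames the whole conversation.
    messages = [{"role": "system", "content": system_guidance}]
    # Earlier user/assistant turns, oldest first, so the model has context.
    messages.extend(history)
    messages.append({"role": "user", "content": prompt})
    return {"model": model, "messages": messages}


def extract_reply(response):
    """Pull the assistant's text out of a parsed chat response."""
    return response["choices"][0]["message"]["content"]


def record_turn(history, prompt, reply):
    """Return a new history list with the latest exchange appended."""
    return history + [
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": reply},
    ]


# Example using a hand-written response in place of a real API call.
question = "Where are the space exhibits?"
body = build_chat_body("You are a concise museum guide.", [], question)
sample_response = {
    "choices": [
        {"message": {"role": "assistant", "content": "Head to the second floor."}}
    ]
}
reply = extract_reply(sample_response)
history = record_turn([], question, reply)
print(json.dumps(body, indent=2))
```

Passing `history` back into `build_chat_body` on the next prompt is what lets a follow-up question build on the earlier exchange.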

You'll notice that for ChatGPT and Whisper, we have two versions of the interface asset. Our new Player technology, available on all platforms but Windows, supports TypeScript-based IAs. Windows does not yet support this type of interface asset, so we use .NET to build IAs for Windows. Functionally, the two variants of each IA are identical, but we had to code them to account for some API complexity. With DALL-E, we were able to work directly with its Web API and thus used API Explorer. (Yes, you could have done this yourself.) IAs for Web APIs are universal across all Player platforms.

How did we build these new interface assets?

All of these OpenAI models are accessible through an API. Our interface assets were constructed to use those APIs:

- The ChatGPT IA uses the OpenAI Chat Completion API to communicate with the underlying GPT LLM.

- The DALL-E IA uses the OpenAI Images API to communicate with the underlying DALL-E model.

- The Whisper IA uses the OpenAI Audio API to communicate with the underlying Whisper model.
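For the curious, here is a rough Python sketch of what a direct call to one of these APIs looks like, using the Images API as the example since it accepts a plain JSON body. This is not how the interface assets are implemented; the helper names, the placeholder key, and the `size` default are assumptions for illustration. The endpoint paths and the bearer-token header follow OpenAI's public REST API. The request is only built here, never sent.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"

# One path per API mentioned above.
ENDPOINTS = {
    "chat": "/chat/completions",       # JSON body
    "images": "/images/generations",   # JSON body
    "audio": "/audio/transcriptions",  # multipart form upload, not JSON
}


def build_json_request(kind, api_key, body):
    """Build (but do not send) a POST request for a JSON-based endpoint."""
    if kind == "audio":
        # Audio transcription expects a file upload, which this sketch skips.
        raise ValueError("the audio endpoint needs a multipart upload")
    return urllib.request.Request(
        OPENAI_BASE_URL + ENDPOINTS[kind],
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def build_image_request(api_key, prompt, size="1024x1024"):
    """Build a request asking for a single generated image."""
    return build_json_request(
        "images", api_key, {"prompt": prompt, "n": 1, "size": size}
    )


# "sk-your-key-here" is a placeholder, not a working key.
request = build_image_request("sk-your-key-here", "A watercolor of a biplane")
# Sending it would be urllib.request.urlopen(request); by default the JSON
# reply lists each generated image under data[i]["url"].
```

Everything in this sketch (the URL, the header, the JSON shape) is the kind of detail an interface asset keeps out of sight.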

As with all interface assets, we hide the underlying API complexity. All you see in Composer are properties, triggers, and actions.

Do I need an OpenAI account to use these interface assets?

Yes, you will need an OpenAI account. Specifically, you will need to supply each IA with your OpenAI API key.

An API key is acquired by creating an OpenAI account and then purchasing tokens. (Every prompt and LLM response consumes tokens, and those tokens have a cost.) Once you have an account and have purchased tokens, head to the OpenAI API Key page to find your key.

Here's something funky that you need to keep in mind: OpenAI will only show you your API key once, when it's originally created. You can NEVER see that key again. Be sure to copy your API key(s) to a safe place so you can reuse them across all of your experiences.

What is a super-cool example of how to use these interface assets?

Please go check out this fantastic example, an AI-driven wayfinder built by Tosolini Productions for the Museum of Flight in Seattle, Washington. It enables museum visitors to easily find exhibition areas that align with their interests by using natural language queries. It showcases how Intuiface can achieve this by utilizing Whisper as the input mechanism and specialized prompt engineering to instruct GPT to provide personalized recommendations.

Of particular note, check out the genius of their prompt. They are "teaching" GPT how to be a wayfinder for the museum. The objective of the wayfinder is defined, the constraints are clarified, and the results are structured. It's a perfect example of "natural language programming", which fits in with the whole no-code aesthetic of Intuiface.
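Here is a small Python sketch of that objective/constraints/structured-results pattern. To be clear, this is not the Tosolini prompt. The exhibit names, the prompt wording, and the JSON shape are all hypothetical, made up to show how a structured reply becomes easy to parse.

```python
import json

# Hypothetical exhibit names, invented for this sketch.
EXHIBITS = ["Hall A", "Hall B", "Hall C"]


def build_wayfinder_prompt(exhibits):
    """Compose system guidance with an objective, constraints, and output shape."""
    lines = [
        "Objective: recommend museum exhibits that match the visitor's interests.",
        "Constraints: recommend only exhibits from this list: "
        + ", ".join(exhibits) + ".",
        "Output: reply with JSON only, shaped as "
        '{"recommendations": [{"exhibit": "...", "reason": "..."}]}.',
    ]
    return "\n".join(lines)


def parse_recommendations(reply_text, exhibits):
    """Parse the model's JSON reply, dropping anything not on the exhibit list."""
    try:
        payload = json.loads(reply_text)
    except json.JSONDecodeError:
        # The model ignored the output instruction; treat it as no result.
        return []
    items = payload.get("recommendations", []) if isinstance(payload, dict) else []
    return [
        item for item in items
        if isinstance(item, dict) and item.get("exhibit") in exhibits
    ]


# A hand-written reply standing in for a real model response.
prompt = build_wayfinder_prompt(EXHIBITS)
sample_reply = (
    '{"recommendations": ['
    '{"exhibit": "Hall B", "reason": "Matches an interest in rockets."}, '
    '{"exhibit": "Gift Shop", "reason": "Not on the list."}]}'
)
recommendations = parse_recommendations(sample_reply, EXHIBITS)
```

Filtering against the known list is a cheap safeguard: if the model recommends something that does not exist, it never reaches the visitor.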

Want to try something in Composer?